
Notes from using tech in the real world - dev, ops, architecture, data science, teams ... and some random stuff

Posts

Showing posts from 2012

KahaDB log files not clearing ActiveMQ


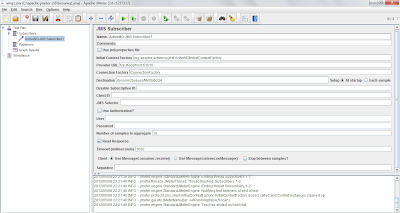

Performance Improvements in ActiveMQ
